Last night one of my friends at IBM asked my opinion about the Exchange Client Network Bandwidth Calculator (it's here). I gave him my response after I had downloaded and played with it. Here it is.

For 10 years, my work and passion have been all about analyzing network traffic for large Lotus Domino customers. I have detailed network and usage statistics for more than a million Notes users in my DNA data warehouse, and I maintain benchmarks to compare customers against. So I think I can judge.

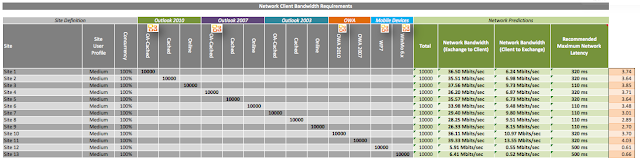

At first look, after playing with this 2012 calculator spreadsheet, it seems pretty complete in the sense that Microsoft has incorporated a lot of variables to represent end user behavior. Now let's see what happens if we run a couple of scenarios:

Scenario A: 10,000 users with a Medium Profile

- Leave all parameters on their initial default values in the worksheet named 'Input';

- In the worksheet named 'Client Mix', specify all users to have a Medium Profile at each site and in such a manner that every version and type of Outlook is assigned 10,000 users;

- Add a column to the right where you divide the resulting bandwidth by the number of users, thus calculating the kilobits per user: (=1024*Table47[@[Network Bandwidth )

Recommended network bandwidth is approximately 4 kilobits per second per user.
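The per-user division in the last step can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name is made up, and it assumes the spreadsheet reports a total in megabits per second (which is how I read the factor 1024 in the formula). The total used below is a hypothetical value picked to land on the roughly 4 kilobits figure, not a number copied from the calculator.

```python
def kbps_per_user(total_mbps: float, users: int) -> float:
    """Convert a total recommended bandwidth (assumed Mbit/s) to kbit/s per user."""
    if users <= 0:
        raise ValueError("users must be positive")
    # 1 Mbit is treated as 1024 kbit, mirroring the factor in the worksheet formula.
    return 1024 * total_mbps / users


# Hypothetical total for 10,000 users, chosen for illustration only.
print(kbps_per_user(39.0625, 10_000))  # 4.0
```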

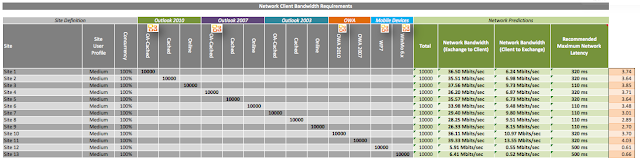

Scenario B: 10,000 users with a Very Heavy Profile

- Again leave the parameters at their initial values;

- Change the Site User Profile to 'Very Heavy';

Recommended network bandwidth ranges from 6.5 to 11.75 kilobits per second, per user.

My observation:

Let's apply the Microsoft recommendations to the network bandwidth requirements I reported to an organization with 9,500 users and 101 office locations worldwide.

The blue bars are network demand levels observed over a 7-day period, obtained by measuring the real end user demand in those locations. The two red lines come from Microsoft's estimates for the Medium and Very Heavy user profiles, which I applied to the user count in each of the office locations.

Of the 101 office locations, 6 would face a severe shortage of network capacity under Microsoft's estimates, even when applying Microsoft's heaviest profile.

If we followed Microsoft's calculator for Medium profiles, more than 50% of all office locations would end up with a severe shortage in their network capacity. For the remaining locations, the customer would end up with significant over-capacity (56% to be exact, averaged across all sites).
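The comparison behind these numbers is simple: multiply the per-user estimate by the user count at each site and hold the result against the measured demand. Below is a minimal Python sketch of that idea. The `Site` type, the site names and all figures in `SITES` are hypothetical, invented for the example; they are not the customer's data. The sketch also uses a plain "estimate below observed" test, whereas what counts as a severe shortage in practice is a judgment call.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    users: int
    observed_kbps: float  # measured demand at the site (hypothetical values below)


def compare(sites, kbps_per_user):
    """Label each site by comparing the profile-based estimate to observed demand."""
    labels = {}
    for site in sites:
        estimate = site.users * kbps_per_user
        labels[site.name] = (
            "shortage" if estimate < site.observed_kbps else "over-capacity"
        )
    return labels


# Made-up sites, for illustration only.
SITES = [
    Site("A", users=100, observed_kbps=250.0),
    Site("B", users=100, observed_kbps=1500.0),
    Site("C", users=50, observed_kbps=500.0),
]

print(compare(SITES, 4.0))    # Medium profile estimate
print(compare(SITES, 11.75))  # upper end of the Very Heavy estimate
```

Even in this toy data set, a single per-user number leaves one site over-provisioned and another short at the same time, which is the pattern the chart shows.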

Why is the Microsoft Calculator giving wrong estimates for almost every site?

Do the default user profiles not represent the behavior of users at my customer properly? Do the users at my customer send and receive more messages, and are these messages perhaps smaller or larger than what Microsoft put in their profiles? I don't think that matters. Putting more load per user profile would lead to even more over-capacity at half of the sites, while still leading to shortage in others.

The real problem with this calculator is putting people into profiles. You cannot predict end user behavior; you can only observe what they are doing today. It's OK to classify your observations into profiles and categories, for example to present pretty pictures. But it is very dangerous to use those classifications to generalize entire user populations for sizing purposes.

My Conclusion:

Predicting network requirements will almost always result in either over-capacity (waste of money) or under-capacity (end users complaining about poor performance).

With DNA, we measure the Real End User Demand over a relevant 7-day period. It is far safer to expect that end users will show the same behavior tomorrow that we observe today than to assume that 4 or so profiles fit all your end users.

My Recommendation:

If your sole objective is to migrate away from Domino, regardless of the consequences and as quickly as possible, go ahead and use Microsoft's Network Bandwidth Calculator. You'll love it.

If you prefer to make well-informed, fact-based decisions with regard to your platform strategy, based on the Real End User Demand, ask IBM to perform a detailed study on the end user demand in your organization.

Side Note:

I am not an expert in Microsoft Exchange or Outlook. Although it is theoretically possible that the network consumption of Outlook users is significantly lower than that of typical Notes users, I doubt that this is the case.